Good afternoon.

I'm Kat Park,

emerging technology leader at SOM

and this is Kyle Steinfeld,

Associate Professor of Architecture

at UC Berkeley.

We'd like to talk today

about some work related to a cluster

we helped to lead

at the 2018 Smart Geometry Conference

held in Toronto.

This cluster was led by the two of us

as well as Adam Menges of Lobe.ai and Microsoft,

and by Samantha Walker of SOM.

This work was supported by Lobe.ai,

by SOM,

and by the SG organization.

While we will present the work

completed by this workshop cluster momentarily,

we'd like to begin with a brief discussion

of the thinking that produced it.

This was a year ago,

which feels like a decade

considering how intensely and quickly

things have been progressing in the ML world.

A year ago,

we came up with a strategy

to test one model

of integrating ML

into creative computational architectural design.

It was one model,

which quickly forked

into other directions during the workshop

as well as by others

in the field during the past year.

As we have seen

in previous moments

in the history of design technology,

the early stages

in the adoption of a new technology

are often marked

by intense periods

of experimentation

and disruptions of existing technical and social frameworks.

Clarity is not easy to come by in such moments;

those who seek certainty in these times

are quickly humbled.

---------------------

While we will present the work completed by this workshop cluster momentarily, we'd like to begin with a brief discussion of the thinking that produced it.

In short,

this cluster sought to test one model

for the integration of Machine Learning

and Creative Computational Architectural Design:

a model that has continued to bear fruit

over the past year.

However,

it should be noted that

we tested just one model

for this integration.

Given the nature of our current moment

of intense interest

and impressive progress

in Artificial Intelligence generally,

we can be sure that this one model

for integrating ML and design practice

will not stand alone.

As we have seen in previous moments

in the history of design technology,

the early stages in the adoption of a new technology

are often marked by

intense periods of experimentation

and disruptions of existing technical and social frameworks.

Clarity is not easy to come by in such moments;

those who seek, or who claim to have found, certainty

in these times are quickly humbled.

After briefly outlining our framework

for the application of Machine Learning to design,

we'll offer a number of examples

that demonstrate both its usefulness and its limits.

These examples

are drawn primarily

from the proposals of participants in the workshop

but also from other relevant projects

that have been developed independently since SG.

We'll begin with Kyle Steinfeld

who will briefly outline the framework

that guided our work.

---------------------

And so,

in the interest of humility,

after briefly outlining our framework

for the application of AI to design,

we'll offer a number of examples

that demonstrate both its usefulness and its limits.

These examples are drawn primarily from

the proposals of participants in our cluster

(as described in our paper)

but also from other relevant projects

that have been developed independently.

[KYLE]

It will come as no surprise

to anyone in this room

that machine learning

has made rapid gains

in recent years.

From left to right:

Goodfellow et al (2014), Radford et al (2015), Liu and Tuzel (2016), Karras et al (2017), Karras et al (2018)

Adapted from General Framework for AI and Security Threats

As clearly demonstrated by this sequence of images.

For those not familiar,

what might not be readily apparent

is that each of these images was generated by a computer,

not as the manipulation of an existing photo,

but rather as an entirely new form

that matches an existing pattern of known forms

drawn from experience.

These are images that the computer has "drawn", on its own.

Synthetic Faces generated by StyleGAN.

Images generated by thispersondoesnotexist.com

The thought of being able to train a computer

to synthesize images,

to "draw" pictures

with such fidelity,

... this is more than a little jarring.

However,

taken from a creative authorship point of view,

it is also quite inspiring.

At least it is to me...

I first studied architecture in the 1990s,

when drawing as an activity was in transition

from analog things,

like pencil and vellum,

to basic digital media

which seemed at the time

to be very different.

at that time,

the mode of authorship

enabled by analog drawing tools

appeared to be a straightforward affair.

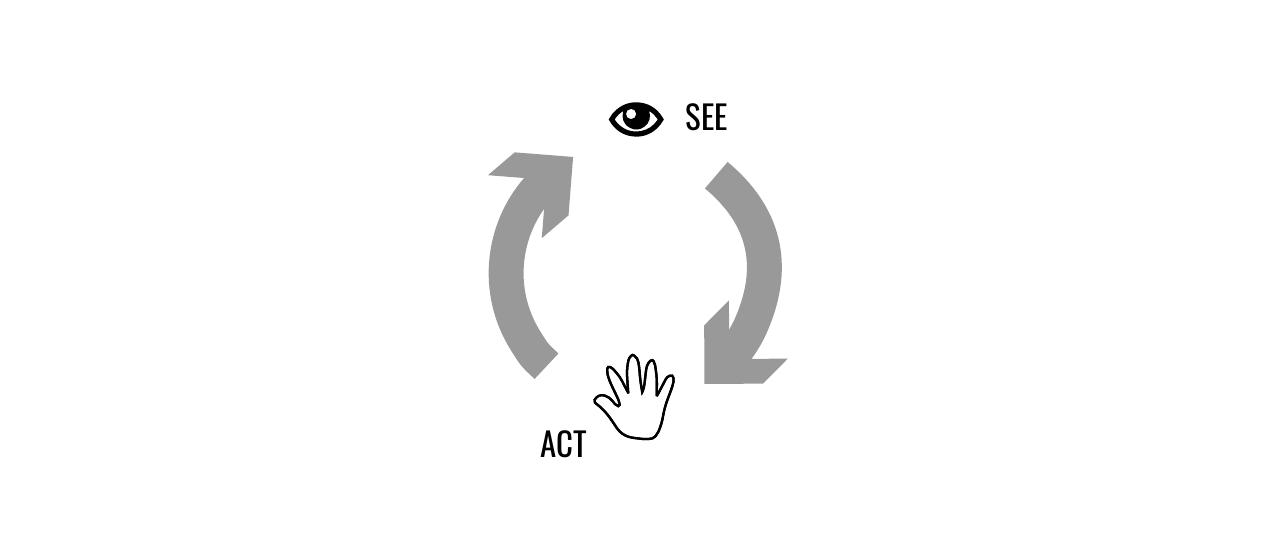

this is a mode

that remains well described

by what I now understand as

the 'reflective loop' of design activity

despite all the changes brought about

by the digital transformation

of the early 2000s

this understanding of design activity

as an iterative cycle of making and seeing

basically held true

even as computers offered new forms of acting

from working indirectly on the shape of curves via control points

to assembling ornate chains of logic via scripting

this model more-or-less held its ground,

and the "seeing" that is so crucial to design,

was left to us humans.

in the late 2000s,

the advent of parametric modeling

changed things slightly

a new form of design action

found its way into practical application

one that formalized

not only computational modes of acting,

but also replaced

the direct visual evaluation of humans

with the formalized evaluation routines

of machines.

this design method,

novel in the early 2000s,

has come to be known as

"generative design"

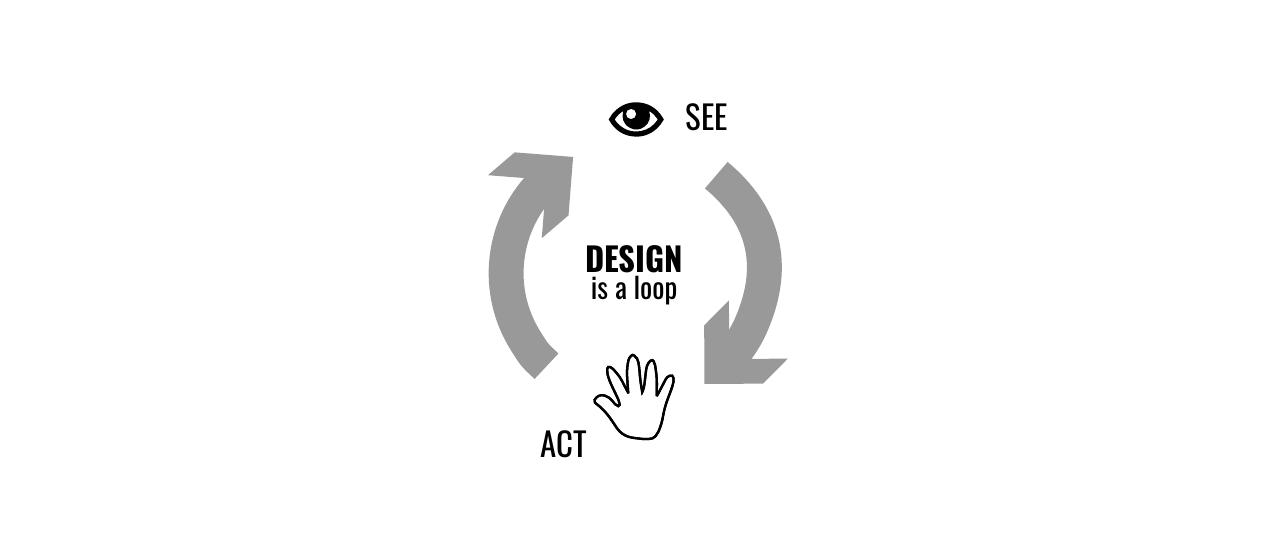

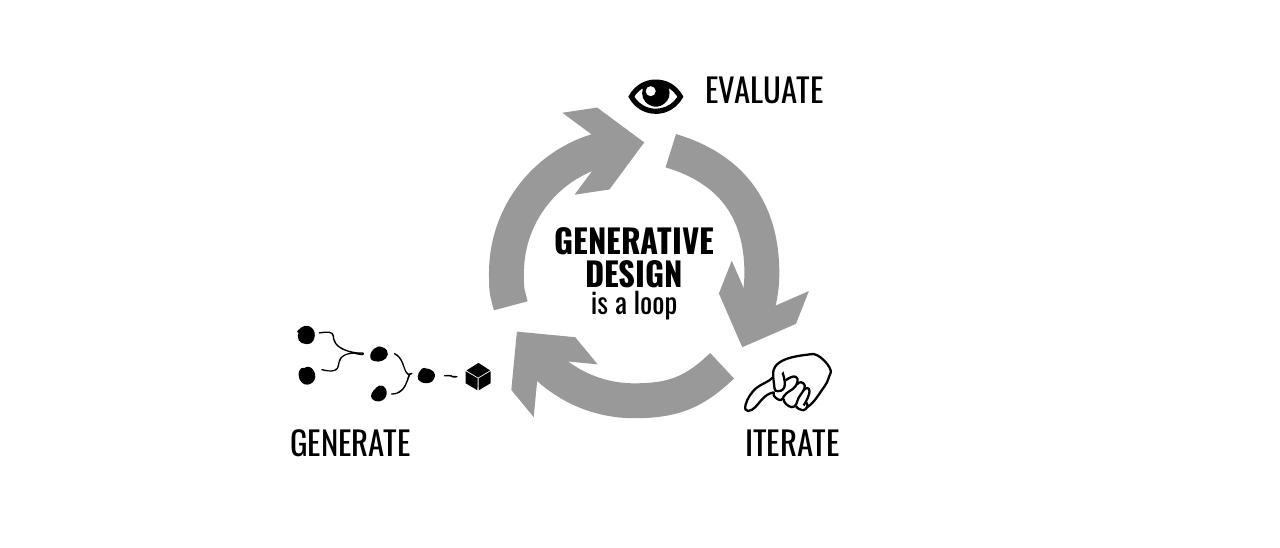

Generative Design in architecture

is widely understood

as a three-stage cycle:

in the generation step,

new potential forms are proposed

using a computational process.

in the evaluation step,

the performance of these forms is quantified

again relying on computational analysis

rather than the subjective eye of the designer

and in the iteration step,

parameters of generation are manipulated

to find better results.
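
The three steps above can be sketched in a few lines of Python. This is a minimal illustration and not the tooling used by the cluster: the box-shaped "form", the envelope-area-per-volume objective, and the names `generate`, `evaluate`, `iterate`, and `run_cycle` are all assumptions made for the example.

```python
import random

def generate(params):
    """Generation step: turn parameters into a form (here, just a box)."""
    return {"width": params[0], "depth": params[1], "height": params[2]}

def evaluate(form):
    """Evaluation step: a quantified objective computed from the form.
    Assumed metric: envelope area per unit volume (lower is better)."""
    w, d, h = form["width"], form["depth"], form["height"]
    area = 2 * (w * d + w * h + d * h)
    volume = w * d * h
    return area / volume

def iterate(params, rng, step=0.5, lo=1.0, hi=10.0):
    """Iteration step: nudge each parameter, clamped to an allowed range."""
    return [min(hi, max(lo, p + rng.uniform(-step, step))) for p in params]

def run_cycle(start, rounds=500, seed=0):
    """Repeat generate -> evaluate -> iterate, keeping the best form found."""
    rng = random.Random(seed)
    best = list(start)
    best_score = evaluate(generate(best))
    for _ in range(rounds):
        candidate = iterate(best, rng)
        score = evaluate(generate(candidate))
        if score < best_score:
            best, best_score = candidate, score
    return best, best_score

start = [2.0, 3.0, 4.0]
start_score = evaluate(generate(start))
best, best_score = run_cycle(start)
print(start_score, best_score)
```

The point of the sketch is only the shape of the loop: every judgment about a form passes through `evaluate`, which is why that step is the natural place to intervene.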

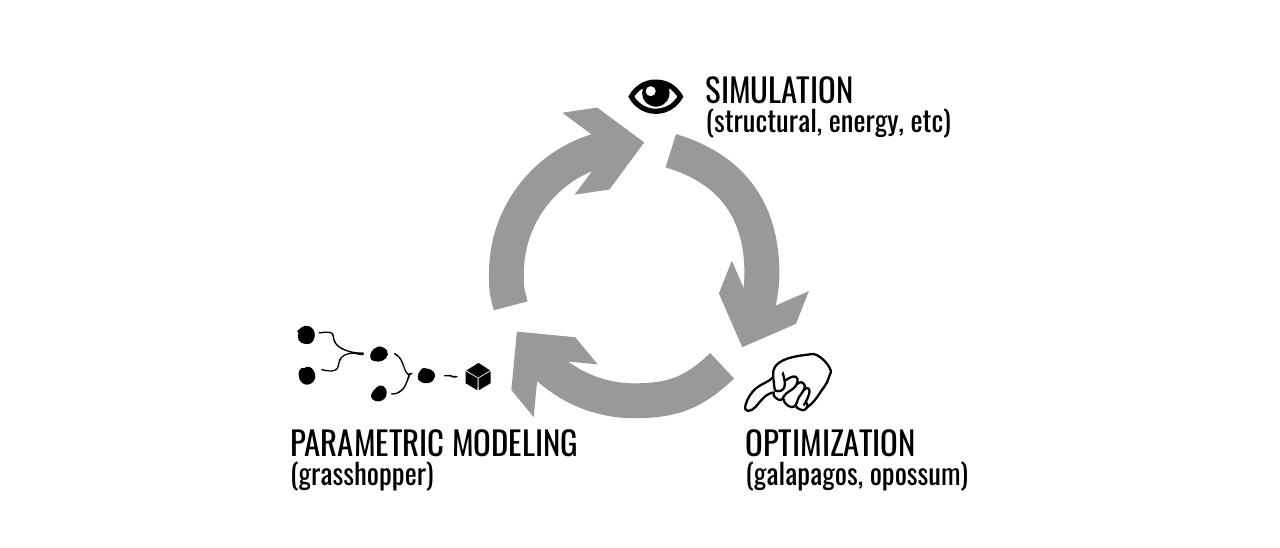

This approach typically employs

a combination of

parametric,

simulation,

and optimization tools.

this is where the contribution of the cluster,

in synthesizing ML

and Generative Design,

comes into play.

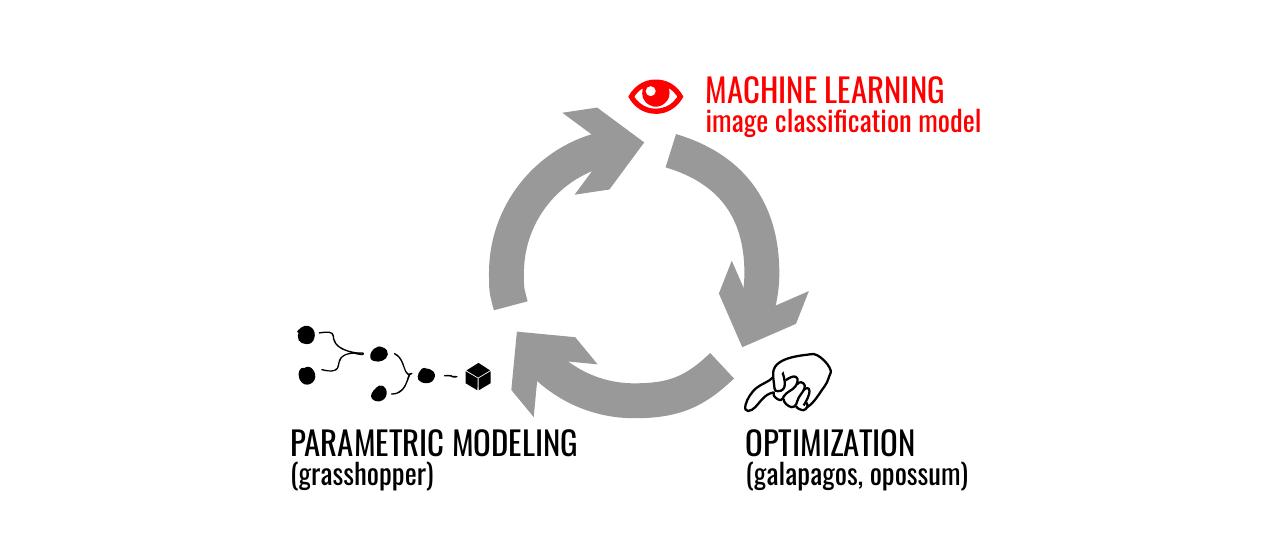

This cluster proposed a modest modification

of the generative design process.

We swap out the evaluation step of the cycle

which is typically the domain

of architectural simulation

for a machine learning process,

specifically

a neural net

trained on image classification tasks

of one form or another

So, for the uninitiated,

what is ML?

and how can it participate in this process?

While we'll likely hear a lot about ML today,

I'll take this chance to offer

my favorite definition:

This process is different enough

from traditional methods for evaluation,

as to warrant an adjustment

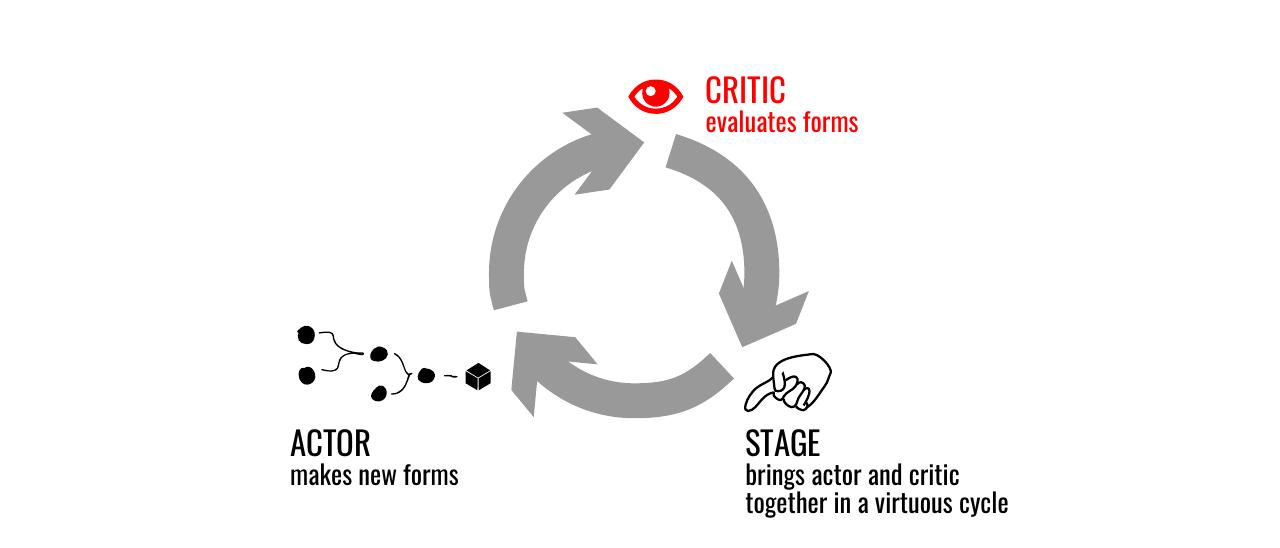

of the **terms** of generative design.

And so,

our cluster re-defines the generative design cycle

as: actor, critic, stage.

As before,

an actor generates new forms,

and describes them

in a format preferred by ML

This issue of format

is a crucial one.

For a variety of reasons,

the most developed ML models

relevant to architectural design

operate on images.

For this reason,

we are content for now

to insist that our actor

re-present architectural form

as image

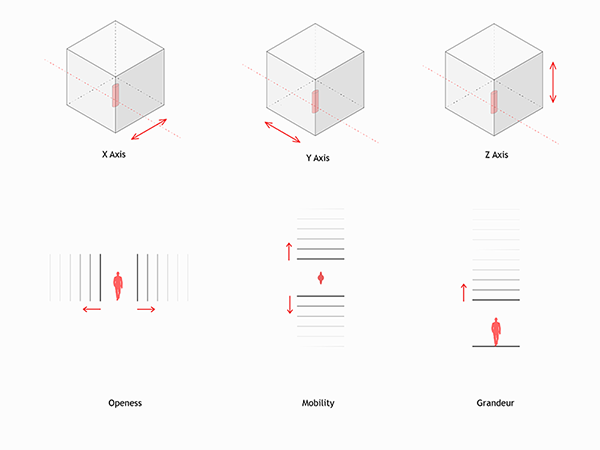

And so,

one important contribution of the cluster

involves the developing of methods

for **describing architectural forms and spaces as images**

in ways that allow the salient qualities

of form and space

to be captured by our critic
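
As a rough illustration of what "describing form as image" can mean, the sketch below rasterizes a plan footprint into a small binary grid. It assumes a footprint made of axis-aligned rectangles and a hypothetical `rasterize` helper; it is far simpler than the representations developed in the cluster and is meant only to show the idea.

```python
def rasterize(boxes, size=16, extent=16.0):
    """Return a size x size grid of 0/1 values.
    A cell is 1 when its center falls inside any box (x0, y0, x1, y1)."""
    cell = extent / size
    image = []
    for row in range(size):
        y = (row + 0.5) * cell
        line = []
        for col in range(size):
            x = (col + 0.5) * cell
            inside = any(x0 <= x < x1 and y0 <= y < y1
                         for x0, y0, x1, y1 in boxes)
            line.append(1 if inside else 0)
        image.append(line)
    return image

# An L-shaped footprint built from two rectangles (illustrative numbers).
l_shaped_plan = [(2.0, 2.0, 12.0, 6.0), (2.0, 6.0, 6.0, 12.0)]
image = rasterize(l_shaped_plan)
filled = sum(map(sum, image))

for line in image:
    print("".join("#" if v else "." for v in line))
```

What gets lost and what survives in such a translation is exactly the design question: a footprint raster keeps shape and proportion but says nothing about height, material, or experience.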

Moving on to the evaluation step,

we define a critic

as a process that evaluates forms

based on patterns and types

learned from experience.

I should emphasize that

the importance of training a critic

should not be underestimated.

This is an important new locus

of creative action in design,

An important new form of subjectivity.

To cede this space

to existing processes and models

would be a huge loss for architectural design.

To secure

this new locus of subjectivity,

and to offer participants

the capacity to train their own models,

we partnered with a company called Lobe.ai

which sponsored our cluster

Lobe provided an essential platform

for training ML models

relevant to architectural evaluation.

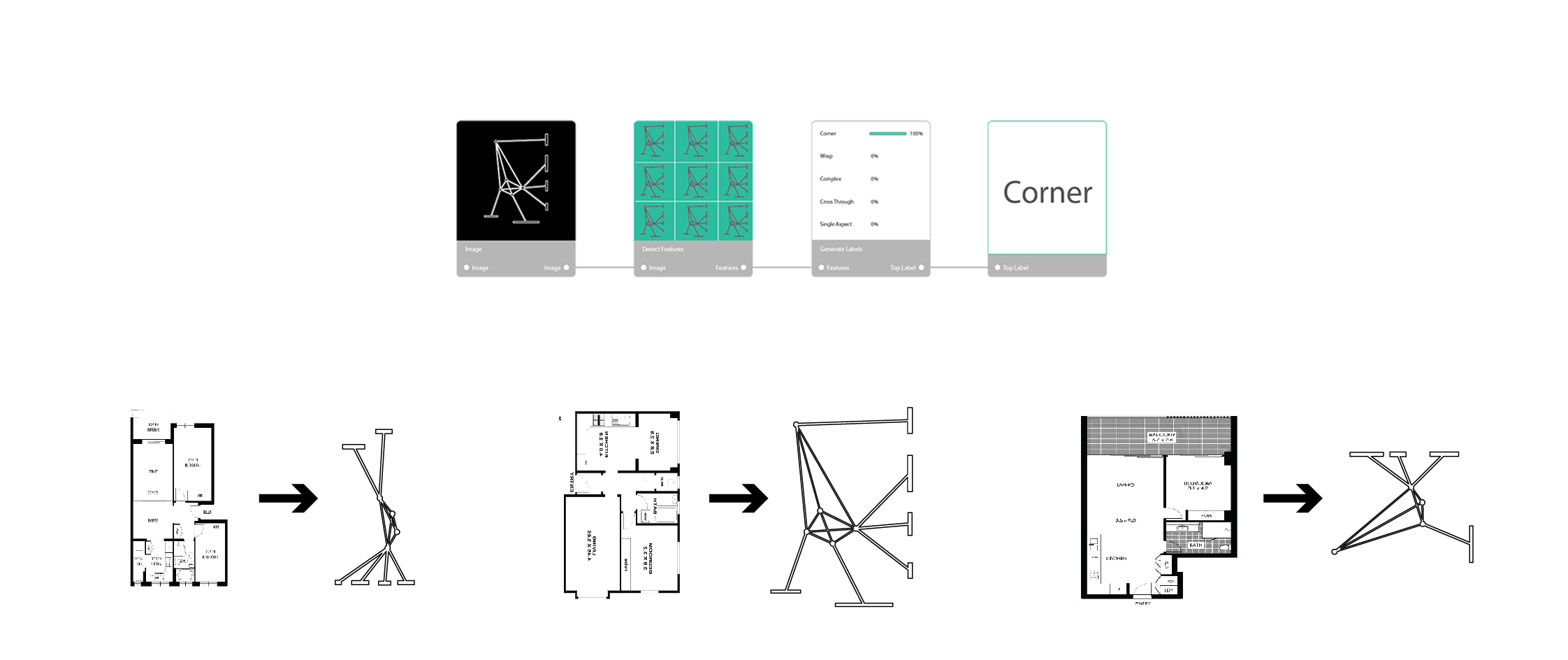

As we see here,

Lobe is a web-based visual tool for

constructing models

for training them

and for allowing them to serve up predictions

An analogy might be helpful:

as Grasshopper is to Rhino

so Lobe is to TensorFlow

Finally, we define a stage.

The stage is the system

which brings together actor and critic,

allowing an actor

to progressively improve her performance.

Here,

traditional optimization techniques are employed,

which I'm sure all of us in the room

hold a rough understanding of.

So,

to illustrate how these pieces go together,

we see in this animation an actor and critic

coming together on a stage.

Here,

a critic is trained on 3d models that describe

typologies of detached single-family homes

cape cod house

shotgun

dogtrot

etc.

The job of the critic

is to evaluate the performance of an actor.

In this case,

our actor generates house-like forms,

such as the ones we see flashing by

in this animation.

These two intelligences

are brought together in an optimization,

wherein the actor

generates new potential house forms,

these forms are scored by the critic

in terms of how much they resemble

a known type of house,

such as the California Eichler style

shown here

and then the process iterates

in a classic optimization.
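
A toy version of this arrangement might look like the sketch below. It rests on several assumptions: the actor draws a simple rectangular footprint as a grid image, the stage is a plain neighbor search, and, most importantly, the critic is a stand-in that compares pixels against one reference image. In the cluster the critic was a trained image classifier, which this sketch does not attempt to reproduce.

```python
SIZE = 12  # images are SIZE x SIZE grids of 0/1 values

def actor(params):
    """Actor: generate a form from parameters and describe it as an image."""
    width, depth = params
    return [[1 if col < width and row < depth else 0 for col in range(SIZE)]
            for row in range(SIZE)]

# Stand-in for learned experience: a single example of the "type" to resemble.
TYPE_EXAMPLE = actor((9, 4))

def critic(image):
    """Critic (placeholder, not a neural net): score in [0, 1] given by the
    fraction of pixels that agree with the type example."""
    agree = sum(1 for r in range(SIZE) for c in range(SIZE)
                if image[r][c] == TYPE_EXAMPLE[r][c])
    return agree / (SIZE * SIZE)

def stage(start, max_rounds=100):
    """Stage: let the actor try neighboring parameters and keep whichever
    the critic scores highest, until no neighbor improves the score."""
    best = tuple(start)
    best_score = critic(actor(best))
    for _ in range(max_rounds):
        moves = ((1, 0), (-1, 0), (0, 1), (0, -1))
        neighbours = [(best[0] + dw, best[1] + dd) for dw, dd in moves]
        neighbours = [(w, d) for w, d in neighbours
                      if 1 <= w <= SIZE and 1 <= d <= SIZE]
        top = max(neighbours, key=lambda p: critic(actor(p)))
        top_score = critic(actor(top))
        if top_score <= best_score:
            break
        best, best_score = top, top_score
    return best, best_score

best, best_score = stage((3, 10))
print(best, best_score)
```

Swapping `critic` for a call to a trained model leaves `actor` and `stage` untouched, which is the sense in which the change to the generative design cycle is a modest one.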

and so,

by modestly adjusting

the nature of the evaluation step

of the generative design process,

we find a way forward

from optimizing for **quantifiable objectives**,

as is typical in generative design

to optimizing for more **qualitative objectives**,

such as architectural typology

or spatial experience

These qualitative objectives

need not be strictly rational.

They might be more whimsical,

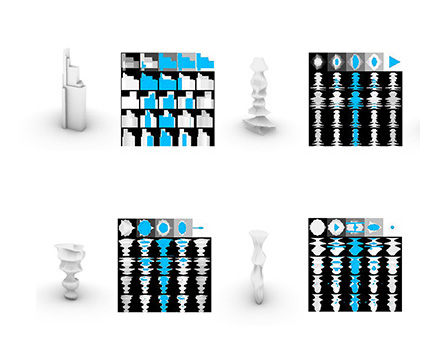

as demonstrated by the Actor-Critic relationship

shown in this animation.

Here,

an actor that generates balloon-like forms

separately attempts to please two critics:

one that prefers zucchinis,

and a second that prefers bananas.

So this is the framework

that was introduced to cluster participants,

who, over the course of the four-day workshop,

probed

extended

exploded and re-assembled

this framework toward a variety of individual ends.

I'm going to talk about some of the applications of this framework that were explored at the workshop.

One of the most interesting things ML offers the design world is its ability to embody qualia: things we can't quite describe with words (and therefore can't script into explicit code). As designers, we are constantly thinking imagistically and qualitatively.

Some of the workshop participants wanted to craft an optimization that speaks to this experiential concept, such as what it feels like to occupy various types of forests. This particular project here extended our isovist representation to attempt to capture the spatial experience of scale.

There are experiments by others who have used ML to capture the intangible, like styles of art and architecture. These are a bit easier, since they deal with artists who have distinct styles and for whom plenty of data exists, but I think the next step is to try to embody the critique that designers engage in, such as what makes a space work or what gives a plan a nice flow.

the work we see here

took a deep dive into feature detection

in images of floor plans

with the goal of

eventually generating these graphics

artificially

Stanislas Chaillou 2019

This is a clear initial application of ML in design that many are attempting in architecture. But since images of our plans are a representation of the final product, not the final product itself in the way art or music is, an extra step is necessary to make ML relevant here. In this example from the GSD, the symbolic representations we use in plans are coded as colors, which allows the ML algorithms to translate those colors into different objects or spaces.

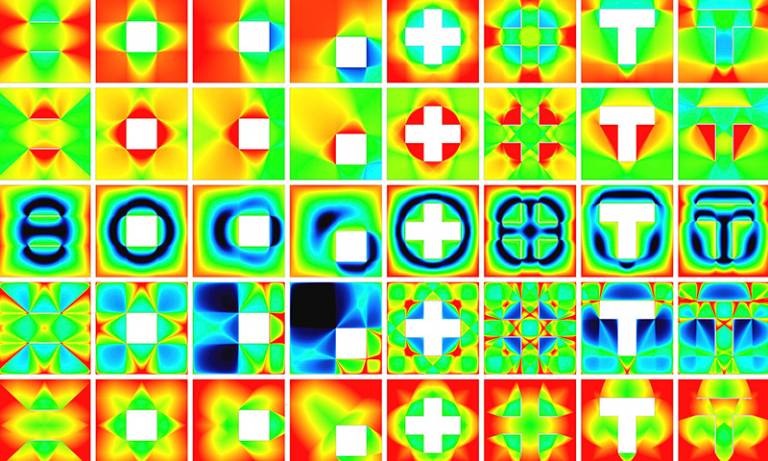

Some participants were interested in

focusing just on a configuration of an actor,

pitting a parametric model that they made

against an existing critic,

in this case one that was trained to predict

the performance of tower forms under wind loads

Replacing a time-consuming or costly simulation

with a trained ML model

is another popular direction that people are looking into

Here you see CFD analysis results that were used as training sets, but there are others doing the same with EUI (energy use intensity) prediction.

These are all simulations that have a large impact on design in practice, especially early on, but they take a long time to run, so by the time results are returned, the designers have moved on.

With a trained machine learning model an API call away, people in practice are trying to reduce this time hurdle.
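
The idea of standing in for a slow simulation with a fast learned approximation can be sketched as follows. Everything here is an assumption made for illustration: `slow_simulation` is an invented formula rather than a CFD or energy analysis, and the "model" is a simple nearest-neighbor interpolation rather than a trained neural network.

```python
import math

def slow_simulation(width, taper):
    """Hypothetical stand-in for a costly analysis of a tower form.
    The formula is invented for this example."""
    return width * width + 0.5 * taper

def build_training_set(steps=10):
    """Run the slow analysis once, ahead of time, on a grid of design parameters."""
    samples = []
    for i in range(steps + 1):
        for j in range(steps + 1):
            w, t = i / steps, j / steps
            samples.append(((w, t), slow_simulation(w, t)))
    return samples

def surrogate(samples, width, taper, k=4):
    """Fast prediction: inverse-distance-weighted average of the k nearest
    precomputed results."""
    query = (width, taper)
    nearest = sorted(samples, key=lambda s: math.dist(s[0], query))[:k]
    weighted = []
    for point, value in nearest:
        d = math.dist(point, query)
        if d == 0.0:
            return value  # the query matches a stored sample exactly
        weighted.append((1.0 / d, value))
    total = sum(w for w, _ in weighted)
    return sum(w * v for w, v in weighted) / total

samples = build_training_set()
predicted = surrogate(samples, 0.33, 0.47)
actual = slow_simulation(0.33, 0.47)
print(predicted, actual)
```

The cost moves from design time to preparation time: the expensive runs happen once while building `samples`, and each later query is answered from them, at the price of some approximation error.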

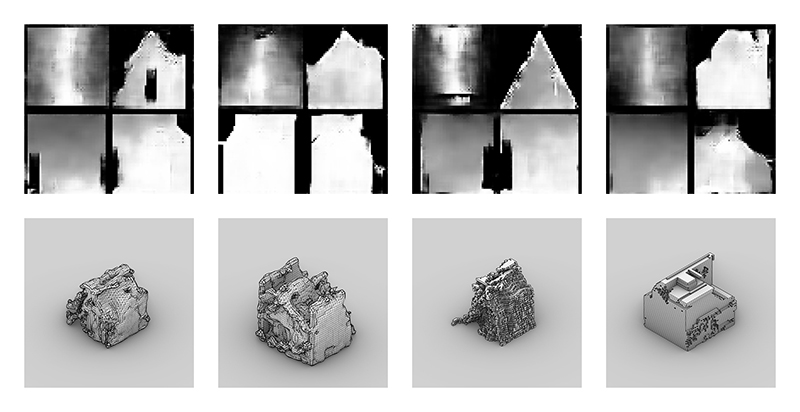

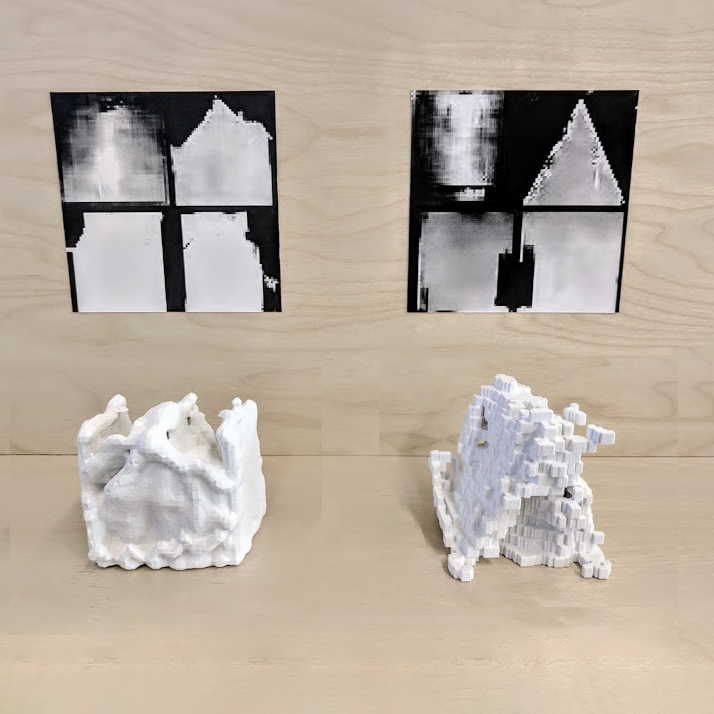

to conclude,

another group was interested

in better understanding

how our critics "see" architectural form

and how this is different

from the way we see it.

to explore this question,

they "reverse engineered"

our raster representations of form

back to three-dimensional form

which validated that what the ML algorithm sees

is actually quite similar to what we see.

this work enabled an entirely new approach based on a Generative Adversarial Network or "GAN"

In short, a GAN replaces not just the evaluation step, but the generative step as well, such that actor and critic are both ML models.

Kyle Steinfeld, 2018

Shown at the NeurIPS 2018 Workshop on Machine Learning for Creativity and Design

www.aiartonline.com/design/kyle-steinfeld/