This studio seeks to prototype tools related to a vision for machine-augmented architectural design, and seeks to develop a new set of creative practices that will be enabled by it.

Kyle Steinfeld, 2020

This talk presents:

I am not an imaginary person.

I am an Associate Professor of Architecture at UC Berkeley, where I've been teaching for just about ten years.

My work centers on the dynamic relationship between the creative practice of design and computational design methods.

I have found that the interplay between technologies of design and the culture of design practice comes into sharp relief at intense moments of technological or social change. In my career as a student and a scholar of architectural design, I have witnessed two such intense moments:

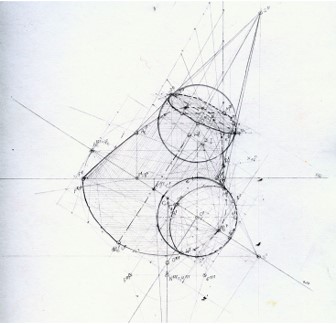

The first was in the mid-1990s, when, as an undergraduate student of architecture, I was a part of a transitional generation that saw the shift from analog to digital representation.

The second was in the early 2000s, when, as a graduate student and young professional, I saw the adoption of computational techniques in design, such as scripting and parametric modeling.

These moments are what the historian Mario Carpo has called the "two digital turns". Based on my experience, it seems to me that we are on the cusp of a third.

I say so based on what has been happening across a range of creative fields.

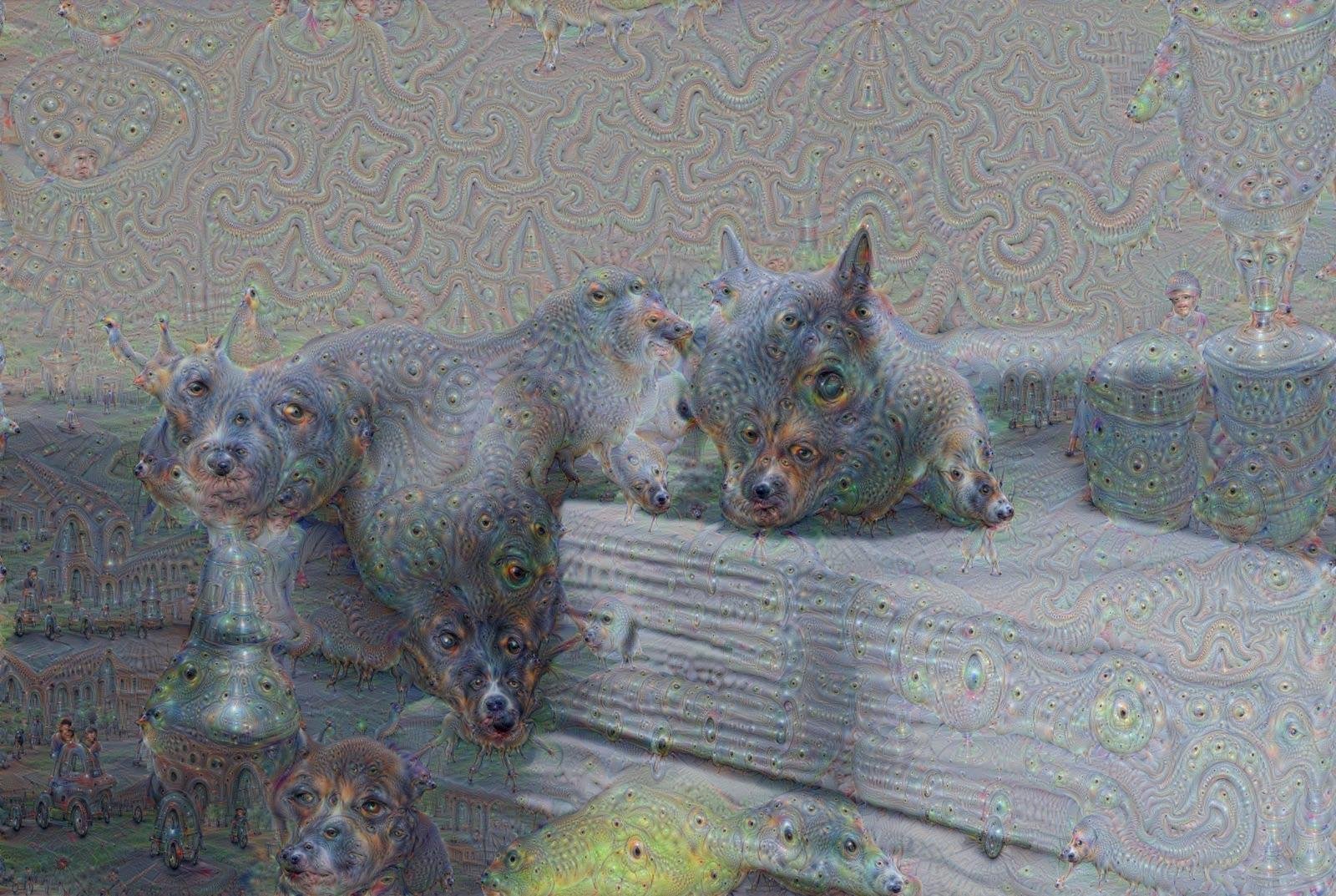

Triggered by new advances in machine learning, and by the development of methods for making these advances visible and accessible to a wider audience, the past five years have seen a burst of renewed interest in generative practices across the domains of fine art, music, and graphic design. The motivation of this studio is to better understand what ramifications these methods might hold for architectural design.

I'll offer here a quick overview of the short history of these tools in creative practice, and will highlight three precedent projects that I find particularly relevant.

Reddit user swifty8883, June 16, 2015

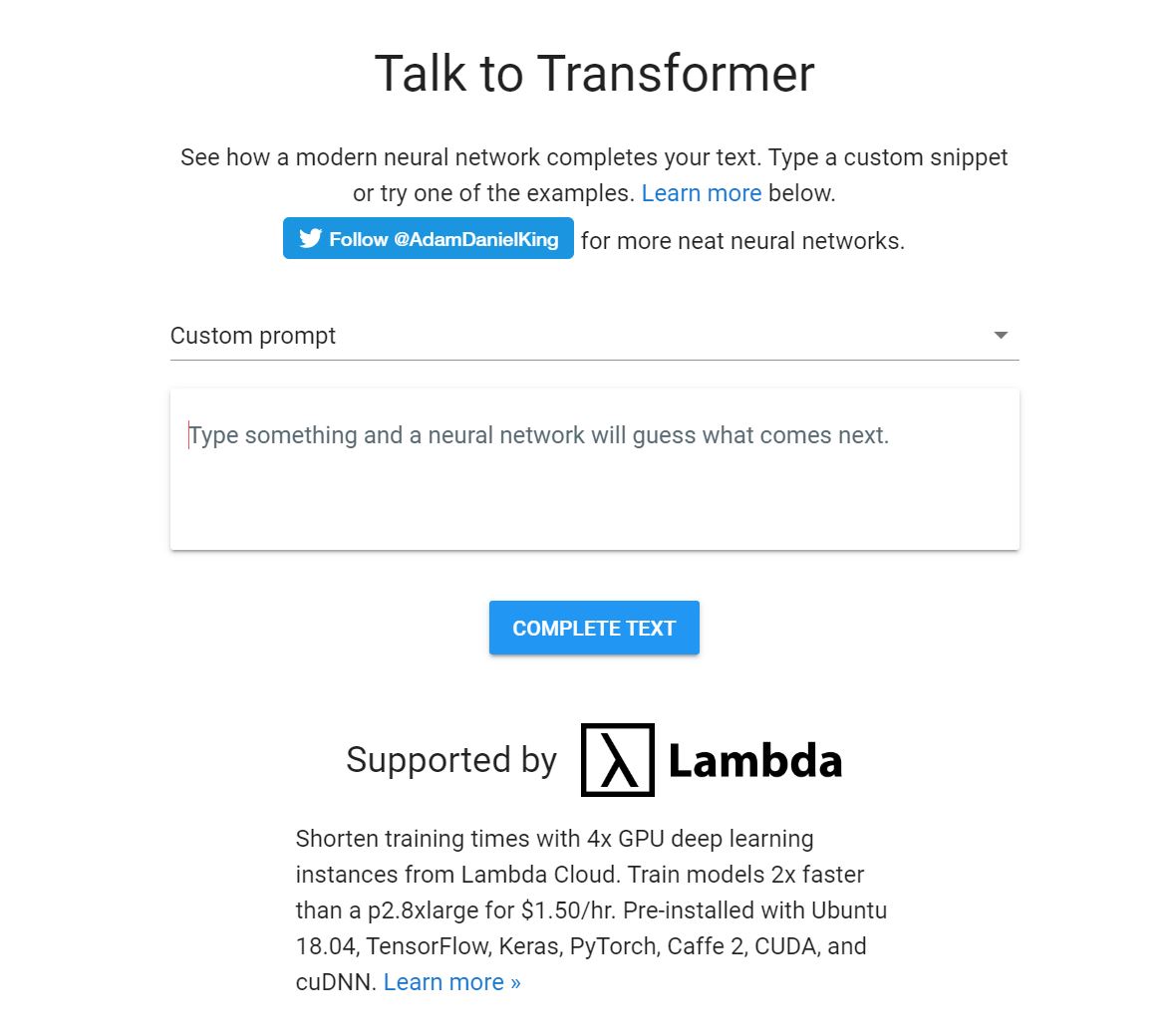

Here, the drawings of an author are augmented with predictions of what is to come next. The model underlying this tool was trained on Google's Quick, Draw! dataset.

The same model as on the previous slide, here visualized to show many possible futures for the sketch.

Gordon and Roemmele, USC 2015

An application that helps writers by generating suggestions for the next sentence in a story as it is being written, based on a model trained on a corpus of twenty million English-language stories.

Here, an ML model has been trained to learn the transformation from a line drawing of a cat to a photographic image of a cat. Once training is complete, the model will attempt to create a cat-like image from any given line drawing.

Neil Bickford, 2019

NVIDIA, 2019

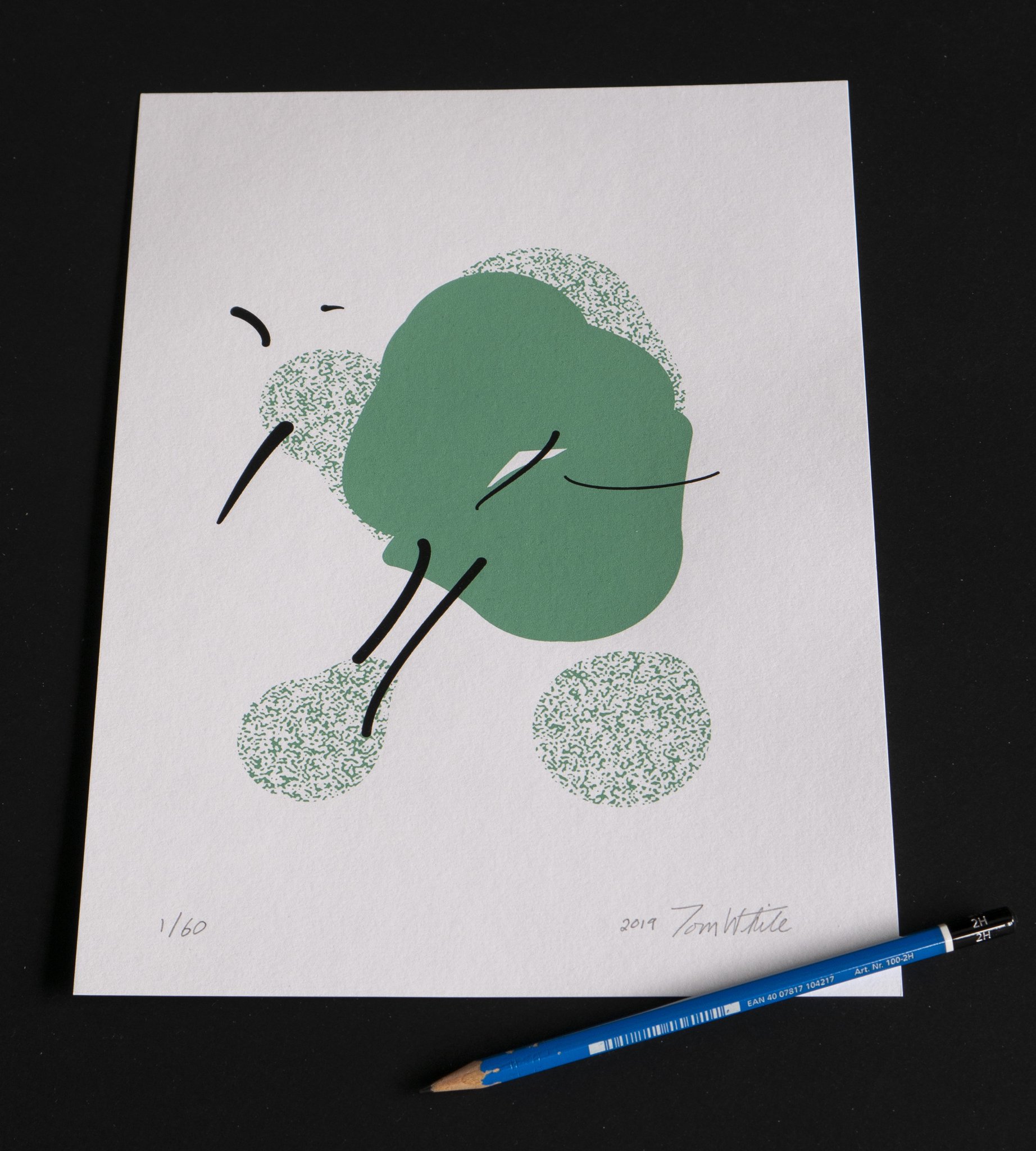

Tom White is an artist who is interested in representing "the way machines see the world". He uses image classification models to produce abstract ink prints that reveal visual concepts.

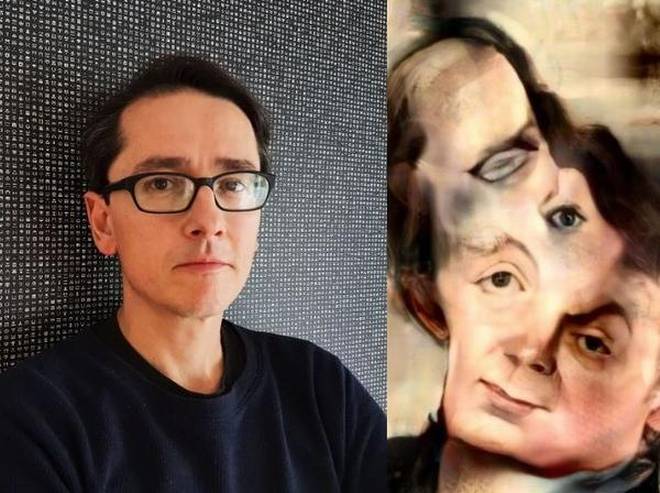

Scott Eaton is an anatomical artist who uses custom-trained transfer models as a "creative collaborator" in his figurative drawings. His large-scale piece "Fall of the Damned" was the inspiration for the Sketch2Pix tool developed for this course.

Scott Eaton, 2019

Perhaps the most ambitious of the precedents I'll show today comes from the tech-forward band YACHT, who produced a concept album titled "Chain Tripping" in which AI played a role at every step. This included:

- The machine generation of melodies and beats based on the band's previous recordings
- The machine generation of lyrics and song titles based on the band's previous albums
- Collaboration with AI artists on the design of the album cover and promotional materials

In a sentiment we hope to emulate in this studio, Claire Evans states: "We wanted to understand it. We knew that the best way to do that is to make something."

Tom White, 2019

Nominated for the Best Immersive Audio Album Grammy

YACHT, 2020

Every song on the album was composed using Magenta's MusicVAE model, all lyrics were generated by an LSTM trained by Ross Goodwin, and parts were performed on Magenta and Creative Lab's NSynth Super instrument. Visual aspects of the album were also made using generative neural networks, including the album cover by Tom White's adversarial perception engines and GAN-generated promotional images by Mario Klingemann. The videos include sequences generated with pix2pix and fonts from SVG-VAE using implementations that are available in Magenta's GitHub repo.

"We wanted to find a way to interrogate technology more deeply," explains Claire L. Evans, one-third of the pop group YACHT. "From the ground up," adds her partner and YACHT founder Jona Bechtolt. The group, rounded out by longtime collaborator Rob Kieswetter, would know: their seventeen-year career has been marked by a series of conceptual stunts, experiments, and attempts to use technology "sideways." Even the band's name speaks to this: YACHT is an acronym for Young Americans Challenging High Technology.

Chain Tripping is their seventh album and third with DFA Records. Recorded between the band's home in Los Angeles and Marfa, Texas, the ten-song collection marks a shift in the group's relationship with technology. Rather than trying to comment on existing platforms from within their own filter bubble, the band stripped their process down and rebuilt it using a technology entirely new to them: artificial intelligence, and more specifically, machine learning.

In order to compose Chain Tripping, YACHT invented their own AI songwriting process, a journey of nearly three years. They first tried to discover any existing YACHT formulas by collaborating with engineers and creative technologists to explore their own back catalogue of 82 songs using machine learning tools. Eventually they created their own working method, painstakingly stitching meaningful fragments of plausible nonsense together from extensive, seemingly endless fields of machine-generated music and lyrics, themselves emerging from custom models created with the help of generous experts in neural networks, deep learning, and AI.

Koki Ibukuro, 2019

Jeremy Graham, 2019

Erik Swahn, 2019

Stanislas Chaillou, 2019

Given the speculative nature of the course, rather than privilege the development of a singular design project, the studio proceeds through a series of lightly-connected "propositions" that explore the potential role of an AI tool in design. These are collected and shown in an exhibit of work. The latter portion of the semester is dedicated to individual student projects, understood as individual theses, that seek to apply AI methods to a student-defined design problem.

We will proceed in short bursts, and we will be patient in allowing small questions to aggregate into larger and more elaborate proposals.

Each week, we read something, make something, and present something for public display.

While the role of each AI tool we encounter will differ (at times acting as an assistant, a critic, or a provocateur), each proposition will offer the studio a chance to better know the underlying technology and how it might figure in a larger process of design.

While we will be driven primarily by the investigation of new design methods, we recognize that such an investigation benefits from the specifics of a unifying architectural design problem.

What is an appropriate test bed for these technologies of the artificial?

Thematically, we will focus on the Northern California landscape, and on the interface between the built environment and the natural environment. Or, rather, on the interface between the artificial built environment and the artificial natural environment.

Bay Raitt, 2019

Here, an ML model has been trained to learn the transformation from line drawings to images of a whole range of objects, from flowers to patterned dresses. Deploying this model in the service of a creative design tool, Nono Martinez Alonso demonstrates the potential of computer-assisted drawing interfaces.